How ‘Rogue One’ Brought Back Characters From ‘A New Hope’, ‘Rebels’, And ‘The Clone Wars’

Rogue One is set in the days immediately before Star Wars: A New Hope, which required, among other things, the resurrection of characters who first appeared 39 years earlier. The filmmakers also included characters from the Prequels and from the animated TV series Star Wars: The Clone Wars. The result is a rich, nostalgic experience that also opened the Star Wars universe to new possibilities with new characters like the Rebel spy Cassian Andor, the heroine Jyn Erso, and her father Galen Erso, who designed the Death Star. Watching these new characters interact with characters from A New Hope, Revenge of the Sith, and The Clone Wars helped make this entry the most satisfying Disney Star Wars film to date.

But it was also a major challenge for director Gareth Edwards and ILM, who used every tool in their toolkit to bring together characters from three different timelines in the Star Wars universe. The characters from A New Hope needed to look exactly as they had 39 years earlier, while characters from the Prequels and The Clone Wars needed to have aged appropriately to fit the timeline of Rogue One.

Re-casting Characters

Jimmy Smits reprised his role as Princess Leia’s adoptive father, Bail Organa.

Some characters were simply recast. General Dodonna, played by Alex McCrindle in 1977, was recast with Ian McElhinney taking the part in Rogue One. Other actors returned to roles they had played previously: Jimmy Smits played Bail Organa in the Prequels and returned to the role in Rogue One.

The part of Mon Mothma was both a recast and a return. Genevieve O’Reilly, who had played the part in Revenge of the Sith, returned to the role in Rogue One. But she had herself taken over the role from Caroline Blakiston, who played Mon Mothma in Return of the Jedi.

Genevieve O’Reilly (left) returned to the role of Mon Mothma in Rogue One. Caroline Blakiston (right) played her originally in Return of the Jedi.

Archival Footage and Additional Dialogue

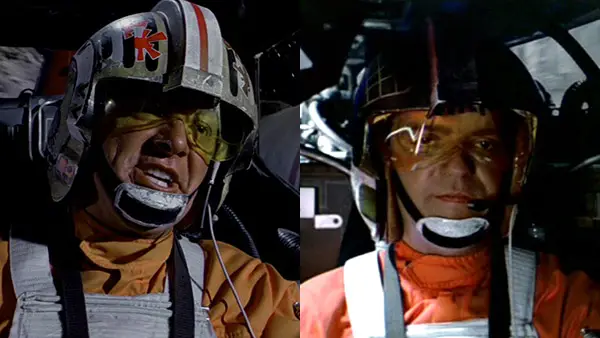

The characters of Red Leader and Gold Leader, who played small but important roles during the Battle of Yavin in A New Hope, were resurrected using outtakes from 1977 so they could make fan-pleasing cameos in the climactic Battle of Scarif in Rogue One. Original Gold Leader actor Angus MacInnes also voiced some new, off-camera lines for the battle.

In both cases, the negatives of the unused takes were scanned and cleaned up. The Red Leader footage, however, was slightly underexposed: the exposure on the actor was acceptable, but the blacks in the surrounding cockpit interior held no detail. ILM rotoscoped the actor off the underexposed background and composited him over a new CG X-Wing cockpit, lit to match the original footage from A New Hope.
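The article doesn't say which compositing tools ILM used, but the core operation behind comping a rotoscoped element over a new background is the textbook Porter-Duff "over" operator. The sketch below is a minimal pure-Python illustration under that assumption: the roto matte supplies an alpha value per pixel (1.0 on the actor, 0.0 on the crushed-black cockpit, fractional along soft edges), so the underexposed background is thrown away wherever the matte is zero. The function names and pixel values are hypothetical.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]


def over(fg: RGB, alpha: float, bg: RGB) -> RGB:
    """Porter-Duff 'over' for one pixel, with a straight (unpremultiplied) foreground.

    alpha comes from the roto matte: 1.0 on the actor, 0.0 on the discarded
    background, fractional along soft edges.
    """
    return (
        alpha * fg[0] + (1.0 - alpha) * bg[0],
        alpha * fg[1] + (1.0 - alpha) * bg[1],
        alpha * fg[2] + (1.0 - alpha) * bg[2],
    )


def comp_plate(plate: Sequence[RGB], matte: Sequence[float], cg_bg: Sequence[RGB]) -> List[RGB]:
    """Composite a scanned live-action plate over a CG background, pixel by pixel."""
    if not (len(plate) == len(matte) == len(cg_bg)):
        raise ValueError("plate, matte and background must be the same size")
    return [over(f, a, b) for f, a, b in zip(plate, matte, cg_bg)]


# Hypothetical 3-pixel row: actor, soft edge, crushed-black background.
plate = [(0.8, 0.6, 0.5), (0.4, 0.3, 0.2), (0.02, 0.02, 0.02)]
matte = [1.0, 0.5, 0.0]
cockpit = [(0.2, 0.2, 0.3), (0.2, 0.2, 0.3), (0.2, 0.2, 0.3)]
print(comp_plate(plate, matte, cockpit))
```

Where the matte is 1.0 the original plate survives untouched; where it is 0.0 only the new CG cockpit shows, which is why a clean matte can rescue footage whose background is unusable.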

Red Leader and Gold Leader

Archival footage of Gold Leader was cleaned up, color corrected, and corrected for fading. ILM then combined it with the CG X-Wing interior from a shot of actress Gabby Wong, who played an X-Wing pilot in Rogue One. Wong was digitally removed, and Gold Leader was composited in her place. Few viewers would notice that Gold Leader is sitting in an X-Wing cockpit doubling for his Y-Wing. MacInnes’s newly recorded off-camera dialogue can be heard over exterior shots of his Y-Wing.

So Red Leader is old footage over a CG background, while Gold Leader is old footage combined with new footage, plus newly recorded dialogue.

A New Hope in Creating Digital Humans

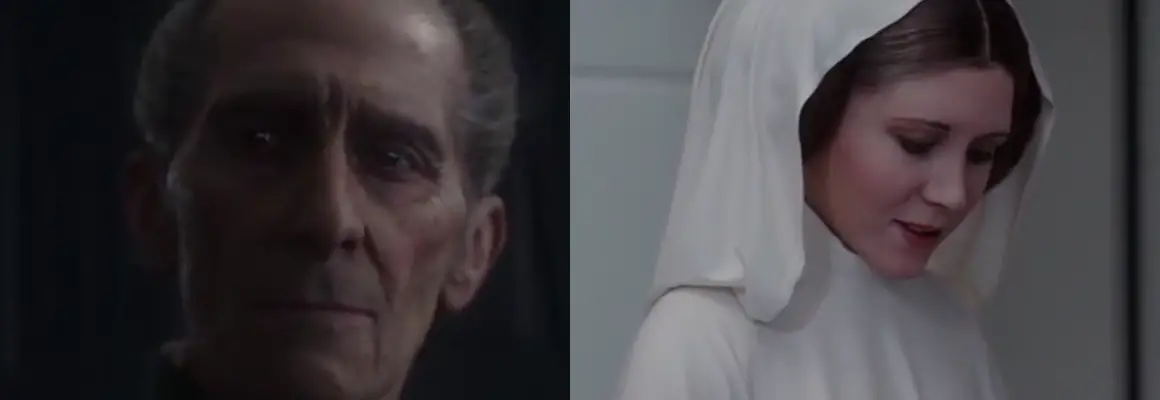

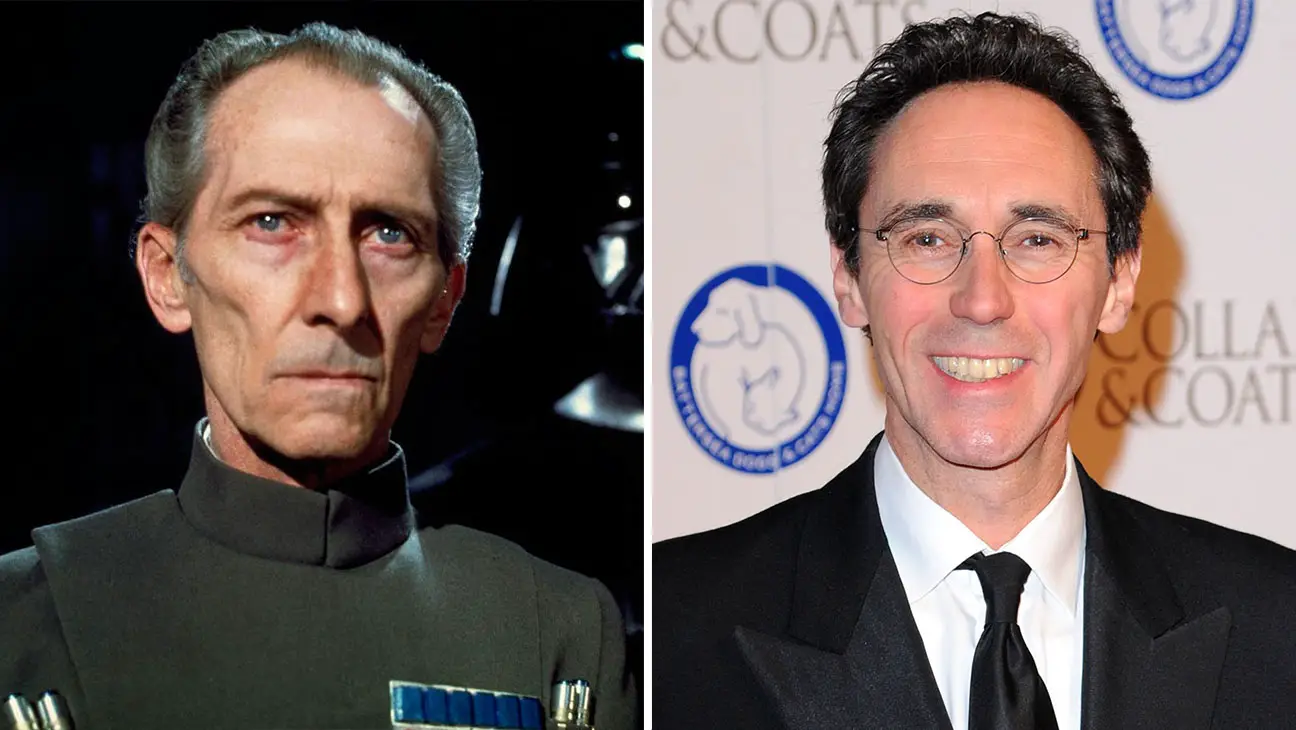

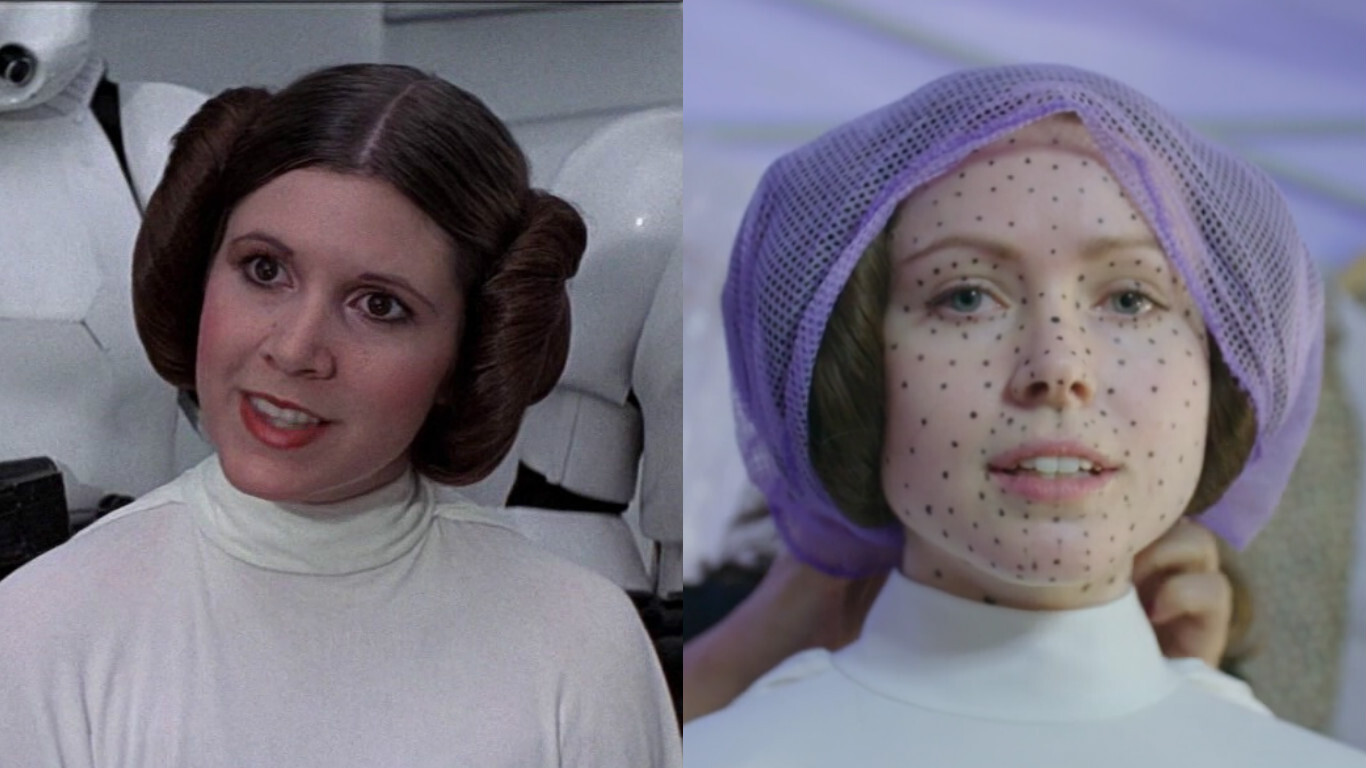

The characters of Grand Moff Tarkin and Princess Leia were created using VFX, though in a sense those parts were also recast. Guy Henry and Ingvild Deila were given digital makeovers to make them look like Grand Moff Tarkin and Princess Leia, respectively.

Bringing Peter Cushing back from the dead posed ethical and legal questions as well as artistic challenges. Carrie Fisher gave her blessing for ILM to develop a CG version of the princess as she appeared in 1977. Leia makes only a cameo appearance, while Tarkin plays a major part in the film, which made him the bigger challenge for ILM.

This important task fell to special effects Jedi and ILM Chief Creative Officer John Knoll, who also devised the plot for Rogue One. His team included Digital Character Model Supervisor Paul Giacoppo, Visual Effects Supervisor Nigel Sumner, and Animation Supervisor Hal Hickel.

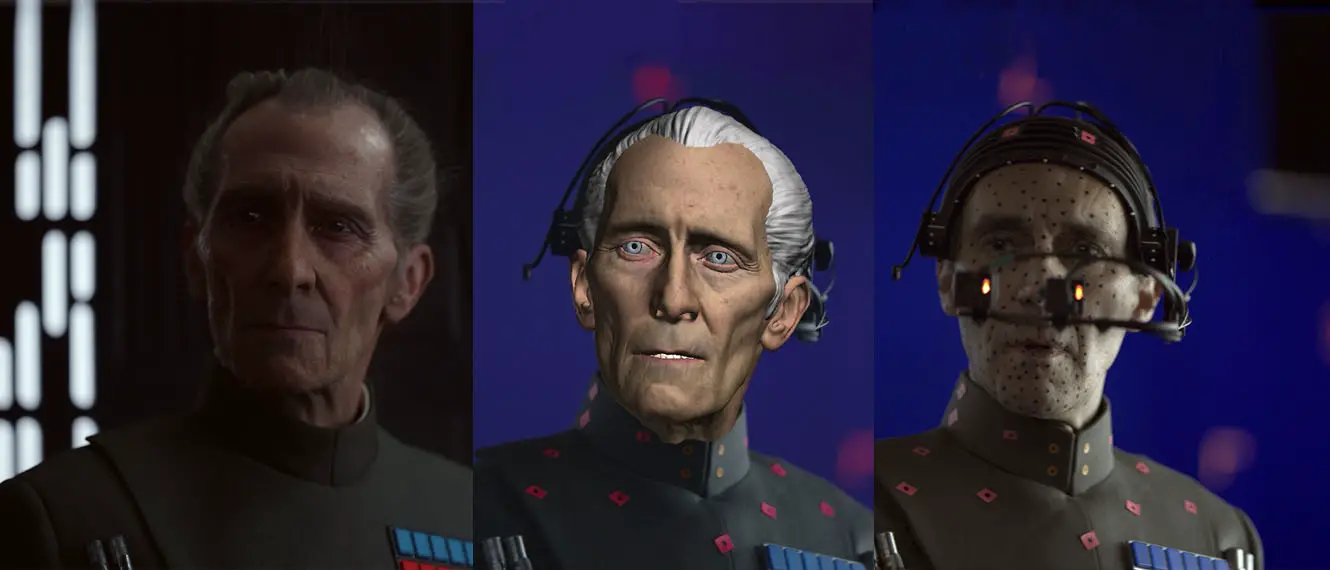

Grand Moff Tarkin

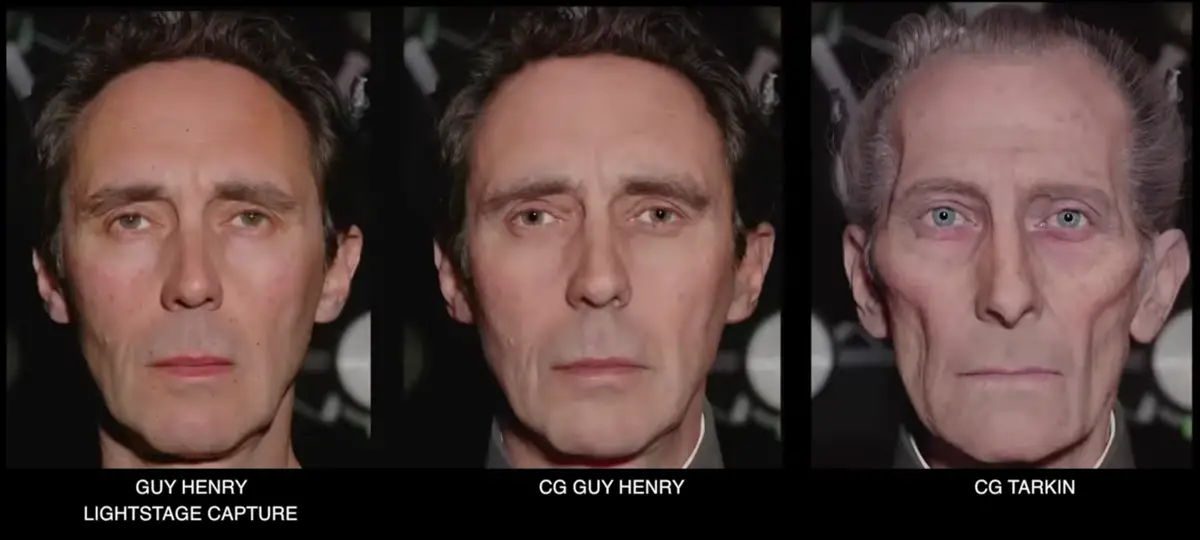

For Tarkin, ILM was not only creating a photoreal digital human but also a CG “resurrection” of the late actor Peter Cushing, who died in 1994. Digital Tarkin has almost as many lines as the real Cushing did in A New Hope. He walks, talks, and interacts with real actors. On set, of course, those actors were interacting with Guy Henry, who did an extraordinary job of mimicking Cushing’s voice and body movements, even though his face doesn’t appear in a single frame of the film. While Henry and Cushing share a narrow facial structure, transforming Henry’s face into a lifelike digital Cushing was complex.

This transformation involved facial capture through Lightstage and Medusa, the use of SnapSolver to help with lip sync, and animation to finish the result. ILM faced some difficult questions: How do you translate Henry’s facial movements, such as the mouth shapes he forms for each speech sound, into those of Peter Cushing? And how do you turn a digital human into a major supporting character?

Facial capture had been used extensively to turn actors into digital creatures, from Bill Nighy’s squid-faced Davy Jones in Pirates of the Caribbean to Mark Ruffalo’s Hulk in The Avengers. Both characters were built on the actors’ own facial architecture, so each actor’s physical likeness is built into the digital character. Other actors had played CG characters that bore no resemblance to them, such as Andy Serkis’ Gollum and Lupita Nyong’o as Maz Kanata, but these characters did not require the level of realism needed for a digital human interacting with real actors on film.

Previous digital humans had been groundbreaking, but they existed in digital environments or were seen too briefly to come under close scrutiny. Digital Tarkin would be different. Every trace of Guy Henry’s own appearance had to disappear; he was a stand-in for a CG “resurrection” of Cushing.

This would be the first digital resurrection of a deceased actor in a prominent speaking role. As such, digital Tarkin had to hold up as photorealistic when sharing the frame with live actors. Although none of Henry’s facial features would be visible, the VFX artists still needed a record of how his skin reflected light under different lighting conditions as a reference for digital Tarkin.

Lightstage Captures Skin Reflective Qualities

Guy Henry went to OTOY in Los Angeles and sat inside Lightstage, a globe-like structure used to capture the diffuse and specular reflectance of his skin. The structure, which has more than 300 lights and cameras placed evenly around it, could simulate different lighting conditions and record their effect on Henry’s face down to very fine skin surface detail.
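The article doesn't detail how ILM used the Lightstage data, but the principle that makes a light stage useful is well established: light is additive, so if a face is photographed lit by each light in turn, its appearance under any combination of those lights is a weighted sum of the single-light photographs. The sketch below illustrates only that superposition step. The tiny greyscale "images", the light names, and the weights are hypothetical, and real pipelines (including separating diffuse from specular reflection) are far more involved.

```python
from typing import List, Sequence

Image = List[float]  # a tiny greyscale image, flattened to one list of pixel values


def relight(basis: Sequence[Image], weights: Sequence[float]) -> Image:
    """Image-based relighting by superposition.

    basis[i] is the face photographed with only light i switched on.
    weights[i] is how bright the target lighting is from light i's direction.
    Because light transport is linear, the face under the combined lighting
    is the weighted sum of the one-light-at-a-time images.
    """
    if len(basis) != len(weights):
        raise ValueError("need exactly one weight per light")
    n_pixels = len(basis[0])
    out = [0.0] * n_pixels
    for img, w in zip(basis, weights):
        for p in range(n_pixels):
            out[p] += w * img[p]
    return out


# Hypothetical 3-pixel captures from two of the rig's lights.
key_light = [0.9, 0.5, 0.1]
fill_light = [0.1, 0.3, 0.4]
print(relight([key_light, fill_light], [1.0, 0.5]))
```

With hundreds of lights instead of two, the weights can be sampled from lighting measured on set, which is one way a face captured once can be shown under many different lighting setups.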

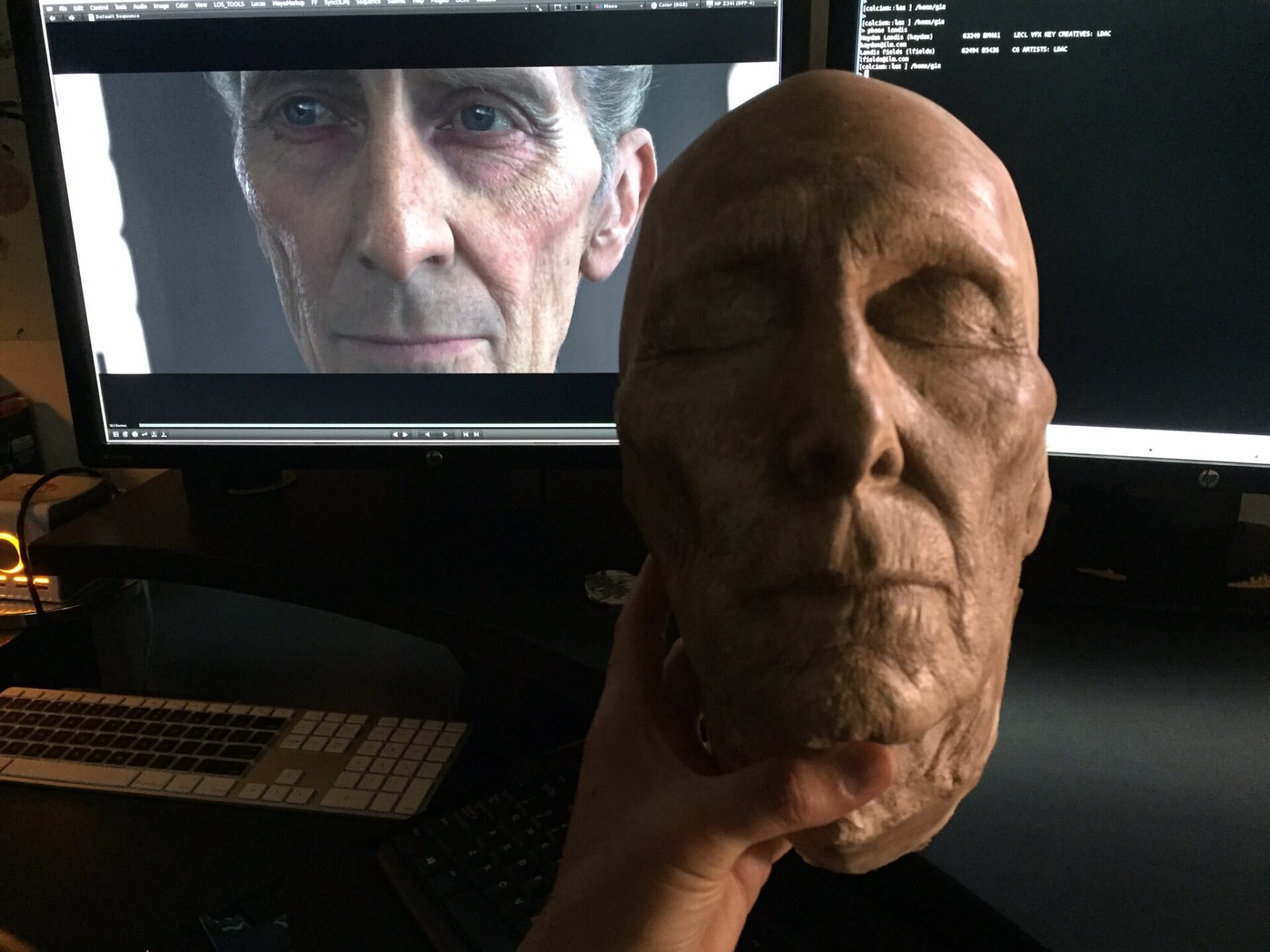

Comparing the scans made of actor Mark Ruffalo for the Hulk with those of Guy Henry shows the stark difference in approach. While Ruffalo’s face was captured onto a digital double of himself, the lighting effects on Henry’s face were transferred onto a digital face of Peter Cushing. The 3D digital head of Cushing came from a scan of a plaster head made of the actor for the movie Top Secret! (1984), a decade before his death.

Capturing a Facial Expression Library

From there, Henry sat inside a Medusa rig, which captured his facial expressions as catalogued by FACS (the Facial Action Coding System). Henry pulled every conceivable type of facial expression, along with the transitions between them, to build a comprehensive FEZ library. The Medusa rig tracks changes in expression frame by frame, including the movement of wrinkles and pores, blood flow, and the shininess of the skin, which makes it well suited to recording nuanced emotions as well as dialogue.

In short, the scan of Cushing’s 1984 plaster head provided a 3D volumetric model onto which the lighting effects captured in Lightstage could be transferred, and which could then be paired with the comprehensive FEZ expression library made in the Medusa rig.

The lip shapes in dialogue scenes were among the most important aspects to get right, since Tarkin is a digital human with a significant speaking part. The proprietary software in the Medusa rig registered the lip shapes produced by spoken dialogue. These shapes are called visemes: a set of static mouth shapes, each representing a cluster of phonemes that look alike on the lips (e.g. /p, b, m/ and /f, v/).
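To make the phoneme-to-viseme idea concrete, here is a minimal sketch of the many-to-one mapping. Only the two lip clusters come from the examples above; the viseme labels, the "jaw_open" class for an "aah" vowel, and the "neutral" fallback are hypothetical, and this is not ILM's actual viseme set or software.

```python
from typing import Dict, List

# Illustrative phoneme -> viseme table (hypothetical labels).
# /p, b, m/ and /f, v/ are the clusters cited in the text.
VISEME_OF: Dict[str, str] = {
    "p": "lips_closed", "b": "lips_closed", "m": "lips_closed",
    "f": "lip_teeth", "v": "lip_teeth",
    "aa": "jaw_open",  # assumed class for the "aah" vowel
}


def viseme_track(phonemes: List[str]) -> List[str]:
    """Map a phoneme sequence to the mouth shapes an animator would key.

    Phonemes that sound different but look the same on the lips
    (e.g. "p", "b", "m") collapse to one viseme; phonemes not in the
    table fall back to a neutral mouth.
    """
    return [VISEME_OF.get(ph, "neutral") for ph in phonemes]


print(viseme_track(["m", "aa", "p"]))
# ['lips_closed', 'jaw_open', 'lips_closed']
```

Because the mapping is many-to-one, "bat", "pat", and "mat" all open on the same closed-lips shape, which is why lip sync is judged on visemes rather than on the sounds themselves.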

The FEZ library allowed ILM to create any expression they needed by adding and combining scans, or ‘blendshapes’. The key advantage of the FEZ blendshapes is that they can be packaged into a facial ‘rig’ that gives the artist sliders for moving between expressions. The rig not only let the digital face move from one expression to another, but also allowed blendshapes to be combined and mixed.
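The article doesn't describe the internals of ILM’s FEZ rig, and production rigs typically layer corrective shapes and non-linear behavior on top. The common baseline for "sliders that move between and mix expressions", though, is the linear delta blendshape model: each slider scales the offset of a sculpted target shape from the neutral face, and active sliders simply add together. The sketch below assumes that model; the shape names and the two-vertex "face" are hypothetical.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Mesh = List[Vec3]


def blend(neutral: Mesh, targets: Dict[str, Mesh], sliders: Dict[str, float]) -> Mesh:
    """Linear 'delta' blendshapes: neutral + sum(w * (target - neutral)).

    Each slider weight is clamped to 0..1, the way a rig slider would be.
    Several sliders can be active at once, which is how expressions mix.
    """
    out = [[float(c) for c in v] for v in neutral]
    for name, weight in sliders.items():
        w = max(0.0, min(1.0, weight))
        for i, (t, n) in enumerate(zip(targets[name], neutral)):
            for axis in range(3):
                out[i][axis] += w * (t[axis] - n[axis])
    return [(v[0], v[1], v[2]) for v in out]


# Hypothetical two-vertex "face" with two sculpted target shapes.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
targets = {
    "smile": [(0.0, 1.0, 0.0), (1.0, 1.0, 0.0)],
    "jaw_open": [(0.0, 0.0, -1.0), (1.0, 0.0, 0.0)],
}
print(blend(neutral, targets, {"smile": 0.5, "jaw_open": 0.5}))
# [(0.0, 0.5, -0.5), (1.0, 0.5, 0.0)]
```

Setting every slider to zero returns the neutral face, and a single slider at 1.0 reproduces its target exactly; everything in between is a mix, which is what lets an animator dial in a half-smile while the jaw is partly open.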

Performing With a Head-Mounted Motion-Capture Camera Rig

The next step was to connect this digital information with Henry’s on-set performance. The FEZ library blendshapes were driven by the head-mounted, four-camera motion-capture rig worn by Henry. As Henry performed, the camera operator could see his performance play out as Cushing on a video monitor in real time, and could therefore frame the digital Cushing as if he were performing live on set like any other actor.

But the blendshapes did not always match the tracking dots well enough to produce an expression that mimicked Cushing. One reason is that the expression library could only be captured while Henry faced forward in the Medusa rig. So when Henry moved on set, the blendshapes could not convey to the digital Cushing how gravity and motion move the face during a performance.

Another reason is that every person uses their face differently. “He doesn’t smile like him,” Hickel told VFXBlog. “He doesn’t form the phonemes the same. So while we could get a great performance from Guy and we could apply that to Tarkin and get a realistic-looking movement, it lacked Tarkin’s likeness. We had high realism, but we had problems with likeness. It looked like Peter Cushing’s cousin or something. So we’d have to then adjust the motion to the face. The animation team would have to adjust it – if he did a smile, say, to get it to look like a Tarkin smile or a Peter Cushing smile.”

Syncing Tarkin’s Features With SnapSolver

ILM resolved this issue with SnapSolver, a tool pioneered on Teenage Mutant Ninja Turtles by VFX duo Kiran Bhat and Michael Koperwas and developed further by Brian Cantwell and Paige Turner on Warcraft. The two films were in production at the same time, so the teams shared information back and forth and began developing a complex facial pipeline that would be used on later ILM productions. All four joined forces to render faces like Maz Kanata in Star Wars: The Force Awakens, but with no alien face to hide behind, syncing the features of a digital human face was an even greater challenge.

SnapSolver adds a mesh that follows the contours of the face as a whole, independent of the tracking dots and individual facial features. That extra layer of information is what gives the performance its nuance and realism. The mesh could be programmed to make tiny, subtly realistic movements across the entire face, and tweaked to lean further into the visemes of Cushing’s original performance. For instance, when Cushing made an “aah” sound his upper lip moved, but Henry’s did not; SnapSolver allowed the animators to add this fine detail to correct the lip sync. Without this and similar tweaks to features like the eyebrows and cheeks, the recreation of Tarkin would have been far less convincing.

Lighting Tarkin

Once the animation was complete, ILM rendered Tarkin’s head in RenderMan RIS, matching the way light behaved on Henry’s skin and hair in the Lightstage scans to the light captured on set during filming. Director Gareth Edwards was inspired by the lighting in Ridley Scott’s Alien and by the naturalistic lighting of documentary filmmaking. This influenced the way DOP Greig Fraser lit and shot Rogue One, though some Tarkin scenes draw on the way DOP Gilbert Taylor shot A New Hope in 1977.

Emulating the lighting in A New Hope was actually very difficult. The lighting was much harsher, mainly because film stocks of the 1970s had much lower ISO ratings. The lower ISO also required heavy makeup, and matching that look in Rogue One would have meant putting every other actor in the scene in the same heavy makeup.

In 50% of the effects shots, Henry’s body and head were both replaced with a full digital Tarkin so that light could be shown falling across the whole body. “We did a full digital replacement using Guy as a guide and in those shots it also gave us the ability to reframe if we wanted to,” explained Nigel Sumner.

Tarkin proved difficult, but it was worth it just to see Peter Cushing in one of his most famous roles one last time.

Princess Leia

ILM used a simpler process for Princess Leia’s brief digital cameo. Her double, Ingvild Deila, wore 100 reference dots on her face instead of a head-mounted camera rig. Five close-up cameras captured the movement of her face, and the digital model of Carrie Fisher’s face was painstakingly mapped to and adjusted against the footage. A SnapSolver overlay added more humanity to the digitized face. Her single shot was considered one of the most labor-intensive in ILM’s history.

The response was mixed. Many viewers were thrilled to see the return of Tarkin and a young Princess Leia, but many still think these digital characters live in the uncanny valley.

By Daniel Rennie